The social influence bias is an asymmetric herding effect on online social media platforms that makes users overcompensate for negative ratings but amplify positive ones. Positive social influence can accumulate and result in a rating bubble, while negative social influence is neutralized by crowd correction.[2] The phenomenon was first described in a 2013 paper by Lev Muchnik,[3] Sinan Aral[4] and Sean J. Taylor.[1] The question was later revisited by Cicognani et al., whose experiment reinforced the results of Muchnik and his co-authors.[6]

Relevance

Online customer reviews are trusted sources of information in various contexts such as online marketplaces, dining, accommodation, movies and digital products. However, these online ratings are not immune to herd behaviour, which means that subsequent reviews are not independent of each other. On many such sites, preceding opinions are visible to a new reviewer, who can therefore be heavily influenced by the earlier evaluations when rating a particular product, service or piece of online content.[7] This form of herding behaviour inspired Muchnik, Aral and Taylor to conduct their experiment on influence in social contexts.

Experimental Design

Muchnik, Aral and Taylor designed a large-scale randomized experiment to measure social influence on user reviews. The experiment was conducted on a social news aggregation website similar to Reddit. Over the 5 months of the study, the authors randomly assigned 101 281 comments to one of the following treatment groups: up-treated (4049), down-treated (1942) or control (the proportions reflect the observed ratio of up- and down-votes). Comments in the first group were given an up-vote upon creation, comments in the second group were given a down-vote upon creation, and comments in the control group were left untouched. A vote is equivalent to a single rating (+1 or -1). Because users could not trace another user's votes, they were unaware of the experiment. Due to randomization, comments in the control and treatment groups did not differ in expected rating. The treated comments were viewed more than 10 million times and rated 308 515 times by subsequent users.[1]
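The random assignment described above can be illustrated with a short Python sketch. It is not the authors' procedure: the study treated comments as they were created, whereas this example shuffles a fixed batch of comment identifiers so that the reported group sizes come out exactly. The function names `assign_groups` and `initial_score`, the integer identifiers and the seed are all hypothetical.

```python
import random
from collections import Counter

# Group sizes reported in the article; the control size follows by subtraction.
TOTAL = 101_281
UP_TREATED = 4_049
DOWN_TREATED = 1_942


def assign_groups(comment_ids, n_up, n_down, seed=0):
    """Randomly split comments into 'up', 'down' and 'control' groups.

    Hypothetical helper: shuffles the ids with a seeded generator and
    slices the shuffled order so that the group sizes are exact.
    """
    rng = random.Random(seed)
    ids = list(comment_ids)
    rng.shuffle(ids)
    assignment = {}
    for position, comment_id in enumerate(ids):
        if position < n_up:
            assignment[comment_id] = "up"
        elif position < n_up + n_down:
            assignment[comment_id] = "down"
        else:
            assignment[comment_id] = "control"
    return assignment


def initial_score(group):
    """Score a comment starts with: +1 if up-treated, -1 if down-treated, 0 otherwise."""
    return {"up": 1, "down": -1, "control": 0}[group]


assignment = assign_groups(range(TOTAL), UP_TREATED, DOWN_TREATED)
counts = Counter(assignment.values())
print(counts["up"], counts["down"], counts["control"])
```

Because the group is chosen by the shuffle alone and not by anything about the comment, the expected rating of a comment is the same in every group before treatment, which is the property the experiment relies on.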

Results

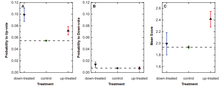

The up-vote treatment increased the probability of up-voting by the first viewer by 32% over the control group (Figure 1A), while the probability of down-voting did not change compared to the control group, which means that users did not correct the random positive rating. The upward bias remained in place for the observed 5-month period. The accumulating herding effect increased the mean rating of treated comments by 25% compared to the control group (Figure 1C). Positively manipulated comments received higher ratings at all parts of the distribution, which means that they were also more likely to collect extremely high scores.[1]

The negative manipulation created an asymmetric herd effect: although the negative treatment increased the probability of subsequent down-votes, the probability of up-voting also grew for these comments. The community performed a correction that neutralized the negative treatment, so the final mean ratings did not differ from those of the control group. The authors also compared the final mean scores of comments across the most active topic categories on the website. The observed positive herding effect was present in the “politics,” “culture and society,” and “business” subreddits, but not in “economics,” “IT,” “fun,” and “general news”.[1]
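The percentages above are stated relative to the control group. The following Python sketch shows that arithmetic, assuming the reported 32% is a relative increase and not a difference in percentage points. The two rates are hypothetical values chosen only so that the relative increase comes out at 32%; they are not figures from the study, and `relative_increase` is an illustrative helper.

```python
def relative_increase(treated_rate, control_rate):
    """Relative change of treated_rate over control_rate, in percent."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (treated_rate - control_rate) / control_rate * 100


# Hypothetical rates chosen only to illustrate the arithmetic; not from the study.
control_upvote_rate = 0.050
treated_upvote_rate = 0.066

change = relative_increase(treated_upvote_rate, control_upvote_rate)
points = (treated_upvote_rate - control_upvote_rate) * 100

print(f"relative increase: {change:.0f}%")
print(f"absolute difference: {points:.1f} percentage points")
```

With these illustrative numbers, a large relative increase corresponds to a much smaller absolute difference in percentage points, which is why the two readings should not be confused.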

Implications

The social influence bias skews online ratings, so review outcomes differ from what they would be without it. In a 2009 experiment,[8] Hu, Zhang and Pavlou showed that the distribution of reviews of a given product made by unconnected individuals is approximately normal, whereas the ratings of the same product on Amazon followed a J-shaped distribution with twice as many five-star ratings as others. Cicognani, Figini and Magnani came to similar conclusions after an experiment conducted on a tourism services website: positive preceding ratings influenced raters' behavior more than mediocre ones.[6] Because positive ratings accumulate while negative ones are corrected by the crowd, community-based opinions end up upward-biased.

References

- ^ a b c d e Muchnik, Lev; Aral, Sinan; Taylor, Sean J. (2013-08-09). "Social Influence Bias: A Randomized Experiment". Science. 341 (6146): 647–651. Bibcode:2013Sci...341..647M. doi:10.1126/science.1240466. ISSN 0036-8075. PMID 23929980.

- ^ Centola, Damon; Willer, Robb; Macy, Michael (2005-01-01). "The Emperor's Dilemma: A Computational Model of Self‐Enforcing Norms". American Journal of Sociology. 110 (4): 1009–1040. doi:10.1086/427321. ISSN 0002-9602.

- ^ Muchnik, Lev. "Lev Muchnik's Home Page". www.levmuchnik.net. Retrieved 2017-05-24.

- ^ "SINAN@MIT ~ Networks, Information, Productivity, Viral Marketing & Social Commerce". web.mit.edu. Retrieved 2017-05-24.

- ^ "Sean J. Taylor". seanjtaylor.com. Retrieved 2017-05-24.

- ^ a b Cicognani, Simona; Figini, Paolo; Magnani, Marco (2016). "Social Influence Bias in Online Ratings: A Field Experiment". doi:10.6092/unibo/amsacta/4669.

- ^ Aral, Sinan (December 19, 2013). "The Problem With Online Ratings". MIT Sloan Management Review. Retrieved June 3, 2017.

- ^ Hu, Nan; Zhang, Jie; Pavlou, Paul A. (2009-10-01). "Overcoming the J-shaped Distribution of Product Reviews". Commun. ACM. 52 (10): 144–147. doi:10.1145/1562764.1562800. ISSN 0001-0782.